import matplotlib.pyplot as plt

import seaborn as sns; sns.set()

import numpy as np

import pandas as pd

from random import gauss

from random import random

from numpy.linalg import inv

!pip install pyunfold

from pyunfold import iterative_unfold

from pyunfold.callbacks import Logger

import matplotlib.cm as cm

import scipy as sp

Convolution & Response#

Convolution#

A convolution is an operation that takes place on two functions, \(f\) and \(g\), producing a third function \(f * g\): $\((f * g)(t) = \int_{-\infty}^{\infty} f(\tau) g(t-\tau) d\tau\)$ Intuitively, this involves flipping one of the functions and sliding it past the other. The value of the convlution at a particular point is then the integral of the overlap of the two functions.

(image from wikipedia)

There are a few properties of convolutions that are useful to know:

\((f * g) = (g *f)\), communtativity

\(f * (g * h) = (f * g) * h\), associativity

\(f * (g + h) = f * g + f * h\), distributivity

\( f * \delta = f\)

Convolutions show up in lots of places, particularly signal processing, responses, physics, etc. This is what is going on in kernel density estimation as well (from earlier in the semester).

Of course there are multiple implementations of convolutions in python (we’ll use the scipy one) but it is easy to lose intuition of what’s going on without working through some simple examples. (Due to the Convolution Theorem most implementations of convolutions in software employ FFT because the convolution of two functions is the product of their Fourier transforms.)

Here’s a simple way to think abou a discrete convolution. Let’s say that we have \(f = [1 2 3 4]\) and \(g = [1 2]\). In order to evaluate the convolution, we flip \(g\) and slide it across \(f\). At each step, we

take the product of the element of \(f\) and the element of \(g\) that are lined up. Those elements are summed together to create an element of the convolution.

Let’s verify that this is the same as one gets using scipy’s convolution function…

from scipy import signal

sig = ([1,2,3,4])

win = ([1,2])

convolution = signal.convolve(sig, win, mode='full')

print(convolution)

This looks like it perhaps should be related to matrix multiplication and it is. If you take one (it shouldn’t matter which) of the functions and convert it into a Toepliz matrix and multiply that times the other function you get the same answer.

Now we can more over to more complicated functions. Here is a \(\delta\) function convoluted with a Gaussian.

sig = np.repeat([0],100)

sig = np.append(sig,np.repeat([1],1))

sig = np.append(sig,np.repeat([0],100))

win = signal.windows.gaussian(60,10,)

filtered = signal.convolve(sig, win, mode='same') / sum(win)

fig, (ax_orig, ax_win, ax_filt) = plt.subplots(3, 1, sharex=True)

ax_orig.plot(sig)

ax_orig.set_title('Original pulse')

ax_orig.margins(0, 0.1)

ax_win.plot(win)

ax_win.set_title('kernel')

ax_win.margins(0, 0.1)

ax_filt.plot(filtered)

ax_filt.set_title('convolution')

ax_filt.margins(0, 0.1)

fig.tight_layout()

Broadening this out to a wider flat distribution…

sig = np.repeat([0],100)

sig = np.append(sig,np.repeat([1],50))

sig = np.append(sig,np.repeat([0],100))

win = signal.windows.gaussian(60,10,)

filtered = signal.convolve(sig, win, mode='same') / sum(win)

fig, (ax_orig, ax_win, ax_filt) = plt.subplots(3, 1, sharex=True)

ax_orig.plot(sig)

ax_orig.set_title('Original pulse')

ax_orig.margins(0, 0.1)

ax_win.plot(win)

ax_win.set_title('Filter impulse response')

ax_win.margins(0, 0.1)

ax_filt.plot(filtered)

ax_filt.set_title('Filtered signal')

ax_filt.margins(0, 0.1)

fig.tight_layout()

And an even wider pulse…

sig = np.repeat([0],100)

sig = np.append(sig,np.repeat([1],50))

sig = np.append(sig,np.repeat([0],100))

win = signal.windows.gaussian(60,10,)

filtered = signal.convolve(sig, win, mode='same') / sum(win)

fig, (ax_orig, ax_win, ax_filt) = plt.subplots(3, 1, sharex=True)

ax_orig.plot(sig)

ax_orig.set_title('Original pulse')

ax_orig.margins(0, 0.1)

ax_win.plot(win)

ax_win.set_title('Filter impulse response')

ax_win.margins(0, 0.1)

ax_filt.plot(filtered)

ax_filt.set_title('Filtered signal')

ax_filt.margins(0, 0.1)

fig.tight_layout()

fig.show()

And something that stair-steps…

sig = np.repeat([0],100)

sig = np.append(sig,np.repeat([1],20))

sig = np.append(sig,np.repeat([0.5],20))

sig = np.append(sig,np.repeat([0],100))

win = signal.windows.gaussian(60,10,)

filtered = signal.convolve(sig, win, mode='same') / sum(win)

fig, (ax_orig, ax_win, ax_filt) = plt.subplots(3, 1, sharex=True)

ax_orig.plot(sig)

ax_orig.set_title('Original pulse')

ax_orig.margins(0, 0.1)

ax_win.plot(win)

ax_win.set_title('Filter impulse response')

ax_win.margins(0, 0.1)

ax_filt.plot(filtered)

ax_filt.set_title('Filtered signal')

ax_filt.margins(0, 0.1)

fig.tight_layout()

fig.show()

It’s worth knowing that a Gaussian convoluted with a Gaussian remains a Gaussian.

import scipy.stats as stats

x = np.linspace(-4, 4, 100)

mean = 0

std_dev = 1

sig = stats.norm.pdf(x, mean, std_dev)

win = signal.windows.gaussian(20,10,)

filtered = signal.convolve(sig, win, mode='same') / sum(win)

fig, (ax_orig, ax_win, ax_filt) = plt.subplots(3, 1, sharex=True)

ax_orig.plot(sig)

ax_orig.set_title('Original pulse')

ax_orig.margins(0, 0.1)

ax_win.plot(win)

ax_win.set_title('Filter impulse response')

ax_win.margins(0, 0.1)

ax_filt.plot(filtered)

ax_filt.set_title('Filtered signal')

ax_filt.margins(0, 0.1)

fig.tight_layout()

fig.show()

We saw that convolving something with sharp edges with a Gaussian smooths out the edges, as we expect. One use for this is in image processing. Here we’ll create a 2D Gaussian and blur an image.

import os.path

import subprocess

def wget_data(url):

local_path='./'

subprocess.run(["wget", "-nc", "-P", local_path, url])

def locate_data(name, check_exists=True):

local_path='./'

path = os.path.join(local_path, name)

if check_exists and not os.path.exists(path):

raise RuxntimeError('No such data file: {}'.format(path))

return path

wget_data('https://raw.githubusercontent.com/illinois-dap/DataAnalysisForPhysicists/refs/heads/main/data/belt.png')

locate_data('belt.png')

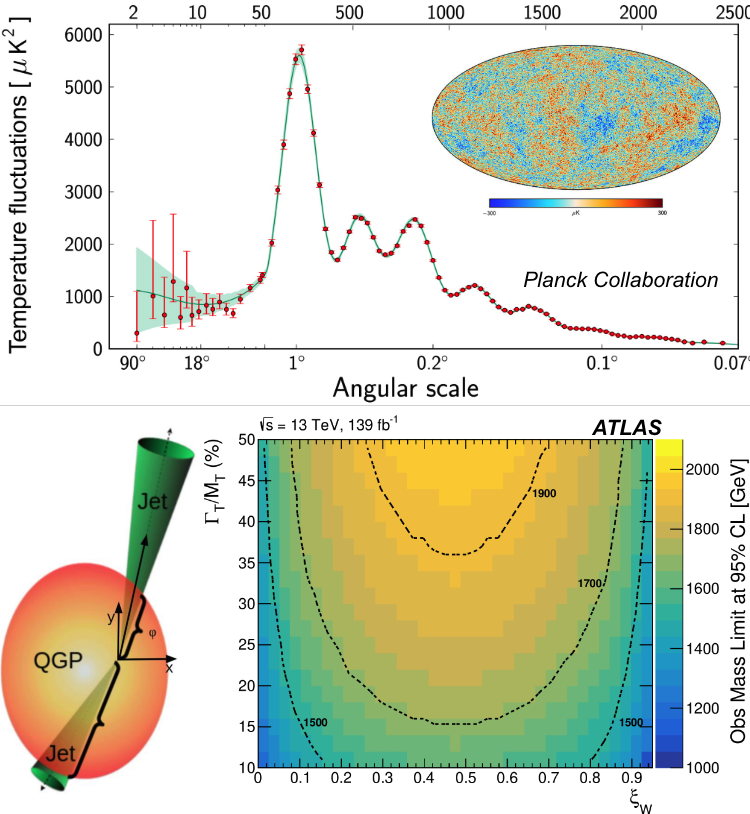

Just pulling out a graph from a couple of weeks ago to blur….

img = plt.imread("./belt.png")

plt.figure()

plt.grid(False)

plt.imshow(img)

t = np.linspace(-10, 10, 60)

sigma2 = 1 # defines the width of the Gaussian...

bump = np.exp(- t**2 /sigma2)

bump /= np.sum(bump)

kernel = bump[:, np.newaxis] * bump[np.newaxis, :] #2D

fig, ax = plt.subplots(1,2,figsize=(10, 5))

ax[0].step(t, bump, where='mid', lw=3,

alpha=0.7)

ax[0].set(xlabel='x', ylabel='amplitude')

ax[1].imshow(kernel)

plt.legend(loc='lower center')

plt.show()

#print(kernel)

And now we convolve the image with our kernel…. (test for yourself that changing the width changes the degree of blurring).

img3 = sp.signal.convolve(img, kernel[:, :, np.newaxis], mode="same")

plt.figure()

plt.grid(False)

plt.imshow(img3)

Response#

Measurement can be thought of as convolution of reality with the kernel defined by the detector. This kernel is called the detector response.

Your measurement isn’t perfect. For example:

there is a finite resolution to the detector. Often this will take the place of a Gaussian (or Gaussian-like) smearing around the true value.

your measurement could be systematically off in scale (e.g. your ruler is 0.5cm too long)

you can have an inefficiency in your detector either from the finite apature or some random loss.

These effects all go into the measurement response.

Every measurement suffers from this to some degree. The issue is that experiments with different responses can leave to different measurements.

Mathetmatically: $\(\vec{M} = \bf{R} \vec{T}\)\( If two experiments have different responses \)\bf{R_a}\( and \)\bf{R_b}\( but are measuring the same quantity \)\vec{T}\(, then \)\vec{M_a}\( and \)\vec{M_b}$ will be different.

\(\bf{R}\) is generally a matrix and it can contain:

inefficency of the detector

the inherent resolution of the measurement process

other effects

Determining the response#

The main way in which experimenters determine the response of a measurement is through simulations. Here the simulations model the interaction of a simulated singal (similar to what is expected for the true values) are input into a model of the detector and the expected output is given. This process is a Monte Carlo. Analytic determinations of detector response are typically not done because the detector should typically be simulated as exactly as possible.

This process can be incredibly detailed and usually requires more computing power than analyzing the data itself. In many parts of physics/medicine, Geant is used. This simulates the passage of particles and radiation through matter based on decades of measurements for specific materials and energies. Other examples are various ray tracking programs for optics. From this, it is possible to determine the response matrix.

Anything requiring a simulation is of course only as good as the simulation itself and all of these tools are imperfect to some degree. Usually experimenters try to do as many checks as possible of the simulation using data itself.

Incorporating the Response into Interpretation#

If the \(\bf{R}\) and \(\vec{M}\) are known then \(\vec{T}\) is constained. There is a choice as to whether to report \(\bf{R}\) and \(\vec{M}\) or to try to constrain \(\vec{T}\) itself. The advantage of reporting \(\bf{R}\) and \(\vec{M}\) is the simplicity. The disadvantage, clearly, is not reporting the actual quantity of interest \(\vec{T}\).

However, getting to \(\vec{T}\) can be a complicated process, as we’ll see below.

The most obvious way to get \(\vec{T}\) is to invert \(\bf{R}\) to get: $\(\bf{R^{-1}}\vec{M} = \bf{R^{-1}}\bf{R} \vec{T}\)\( \)\(\bf{R^{-1}}\vec{M} = \vec{T}\)$

We’ll consider that as a first step, and then discuss unfolding which is a more stable proceedure to get \(\vec{T}\) from \(\bf{R}\) and \(\vec{M}\).

First we’ll generate samples, num_samples, according to a distribution. This is what nature made for us.