Fitting, part 2#

Here we’ll look at some special situations in fitting

%pip install numba_stats

%pip install iminuit

%pip install jacobi

import iminuit

from iminuit import cost, Minuit

import numba_stats

from numba_stats import truncnorm, truncexpon, norm, expon

import numpy as np

from matplotlib import pyplot as plt

from scipy.stats import multivariate_normal as mvnorm

from jacobi import jacobi

from matplotlib import pyplot as plt

import numpy as np

from iminuit.cost import LeastSquares

import seaborn as sns; sns.set()

Fitting small datasets#

Let’s consider a very small dataset….Whereas before we had 1000 samples, now let’s only generate 100 (50 from the Gaussian and 50 from the exponential). Everything else is the same.

xr = (0, 2) # xrange

rng = np.random.default_rng(1)

xdata = rng.normal(1, 0.1, size=50)

ydata = rng.exponential(size=len(xdata))

xmix = np.append(xdata, ydata)

xmix = xmix[(xr[0] < xmix) & (xmix < xr[1])]

#np.histogram returns the values (n in this case) and the bin edges (xe in this case)

n, xe = np.histogram(xmix, bins=20, range=xr)

cx = 0.5 * (xe[1:] + xe[:-1])

dx = np.diff(xe)

#this plots "cx" which is the middle of every bin "xe" and n, the total counts in each bin along with the sqrt(n) as the error bar

plt.errorbar(cx, n, n ** 0.5, fmt="ok")

def pdf(x, z, mu, sigma, tau):

return (z * truncnorm.pdf(x, *xr, mu, sigma) +

(1 - z) * truncexpon.pdf(x, *xr, 0.0, tau))

c = cost.UnbinnedNLL(xmix, pdf)

m = Minuit(c, z=0.4, mu=1, sigma=0.2, tau=1)

m.limits["z"] = (0, 1)

m.limits["mu"] = (0, 2)

m.limits["sigma", "tau"] = (0, None)

m.migrad()

We’ve set the error bars on the histogram just to be the square root of the number of counts in each bin. That made sense when we had a lot of counts but here it’s not the right choice:

the error bars here for empty bins are shown as zero (since 0 is the sqrt of 0) and

1 for bins with a single count, but we know (from lecture X) that the probablity distribution is just a Poisson and so you should have asymmetric uncertainties here. We could make the bins wider so that we have a larger number of counts/bin and that would make the \(\sqrt{N}\) uncertainties correct, but we then lose information about where the points were.

However, this unbinned fit doesn’t care at all about the number of counts per bin because it doesn’t know about them; it just has the data itself, no binning.

def cdf(xe, z, mu, sigma, tau):

return (z * truncnorm.cdf(xe, *xr, mu, sigma) +

(1-z) * truncexpon.cdf(xe, *xr, 0, tau))

c = cost.BinnedNLL(n, xe, cdf)

m = Minuit(c, z=0.4, mu=0, sigma=0.2, tau=2)

m.limits["z"] = (0, 1)

m.limits["sigma", "tau"] = (0.01, None)

m.migrad()

Here’s the binned fit, but it doesn’t take in the errors, it just takes in the counts (n) and the bins (xe) so it doesn’t make an assumption about Gaussian uncertainties. Here it does very similar results between the binned and unbinned fits.

Let’s make another choice about the binning now; let’s go from 20 bins in the histogram to 10. Where the peak was about 2 bins wide before, now it should be about 1 bin wide. Everything else, including the underlying data, stays the same.

n_coarse, xe_coarse = np.histogram(xmix, bins=10, range=xr)

c = cost.BinnedNLL(n_coarse, xe_coarse, cdf)

m = Minuit(c, z=0.4, mu=0, sigma=0.2, tau=2)

m.limits["z"] = (0, 1)

m.limits["sigma", "tau"] = (0.01, None)

m.migrad()

Comparing the fit parameters, we see that the \(\sigma\) value is what has changed the most (by a factor of 2.5) and the \(\tau\) parameter has also changed a lot. \(\mu\) (the mean of the Gaussian) is pretty stable (unsurprisingly).

The lesson here is that you want as many bins as your data can support. In the limit of small statistics, an unbinned fit is best because all the available information about the dataset is contained in the fit.

Template Fitting#

In many situations, one wants to decompose a measured distribution to get a measurement for the fractional contributions of the various distributions. These fractional contributions often do not have the shape of some fundamental probability distribution (like a Gaussian or an exponential), but are rather measured or come from some Monte Carlo simulation.

In this case, instead of a PDF like above, the PDF is composed of fractional contributions to some number of components. This is template fitting.

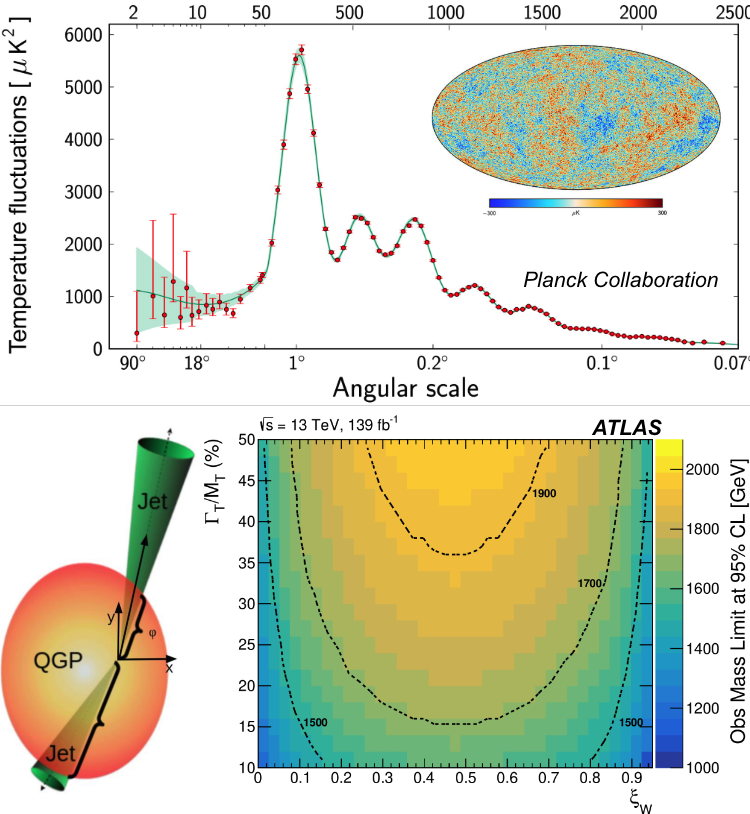

Here’s a direct example from this paper. Here there are jets coming from three kinds of quarks (light, charm and bottom) and a template for what kind of \(p_{T,rel}\) distribution each type of quark should produce. The measured data are fitted to a PDF based on the template:

Note, above there are three templates but only two fit parameters because the total integral of what is being fit is fixed.

%config InlineBackend.figure_formats = ['svg']

%pip install iminuit

from iminuit import Minuit

from iminuit.cost import poisson_chi2, Template

import numpy as np

from scipy.stats import norm, truncexpon

from scipy.optimize import minimize

import matplotlib.pyplot as plt

import scipy.stats

from scipy.stats import expon

from scipy.stats import norm

bins = 20

xe = np.linspace(0, 6, bins + 1)

data = np.append(expon.rvs(size=100),norm.rvs(size=100, loc = 3))

print(np.shape(data))

norm_data = norm.rvs(size=50, loc=3)

expon_data = expon.rvs(size=50)

data_hist, edges = np.histogram(data,xe)

print(np.shape(data_hist))

norm_template, edges = np.histogram(norm_data,xe)

expon_template, edges = np.histogram(expon_data,xe)

template = np.array([norm_template, expon_template])

print(np.shape(template))

fig, ax = plt.subplots(1, 3, figsize=(10, 4), sharex=True)

ax[0].stairs(data_hist, xe, fill=True)

ax[1].stairs(norm_template, xe, fill=True)

ax[2].stairs(expon_template, xe, fill=True)

def cost(yields):

mu = 0

for y, c in zip(yields, template):

mu += y * c / np.sum(c)

r = poisson_chi2(data_hist, mu)

return r

cost.errordef = Minuit.LEAST_SQUARES

cost.ndata = np.prod(data_hist.shape)

starts = np.ones(2)

m = Minuit(cost, starts)

m.limits = (0, None)

m.migrad()

m.hesse()

The precision of the templates affects the precision of the fit.

b = 1000

rng = np.random.default_rng(1)

pars = []

for ib in range(b):

ti = rng.poisson(template)

#vary the template to bootstrap the uncertainty

def cost(yields):

mu = 0

for y, c in zip(yields, ti):

mu += y * c / np.sum(c)

r = poisson_chi2(data_hist, mu)

return r

mi = Minuit(cost, m.values[:])

mi.errordef = Minuit.LEAST_SQUARES

mi.limits = (0, None)

mi.strategy = 0

mi.migrad()

assert mi.valid

pars.append(mi.values[:])

cov2 = np.cov(np.transpose(pars), ddof=1)

cov1 = m.covariance

for title, cov in zip(("data", "bootstrap", "fit+bootstrap"), (cov1, cov2, cov1 + cov2)):

print(title)

for label, p, e in zip(("b", "s"), m.values, np.diag(cov) ** 0.5):

print(f" {label} {p:.0f} +- {e:.0f}")

print(f" correlation {cov[0, 1] / np.prod(np.diag(cov)) ** 0.5:.2f}")

Simultaneous Fits#

Finally, there is no restriction that a single observable or dataset has to be fit independently of other observables or datasets. Very often a global minimum of many things is desired. Technically these require work to set up but the ideas build upon those outlined here.

A couple of examples:

this paper from LHCb testing lepton flavor universality fits four different masses from a control data set (see Fig. 1)

global fits are used in some areas of physics where data from many different experiments and observables is used to contrain something that is not observable directly itself. This is an example of a fit to parton distribution functions.

recently global fits have been coupled to ML. Here is an example of that applied to parton distribution functions.