import matplotlib.pyplot as plt

import numpy as np

import seaborn as sns; sns.set()

import pandas as pd

from matplotlib.patches import Ellipse

import matplotlib.transforms as transforms

from matplotlib import pyplot as mp

from scipy.stats import norm

Confidence from Data#

What did you measure? What does it mean? Here we will discuss using statistics to turn data into conclusions.

Anyone wants to be able to draw conclusions from data and that requires some statement about the probabilities associated with a given dataset. Here are some examples:

You have a diagonstic test and you want to be able to determine if an individual should be treated for a given condition.

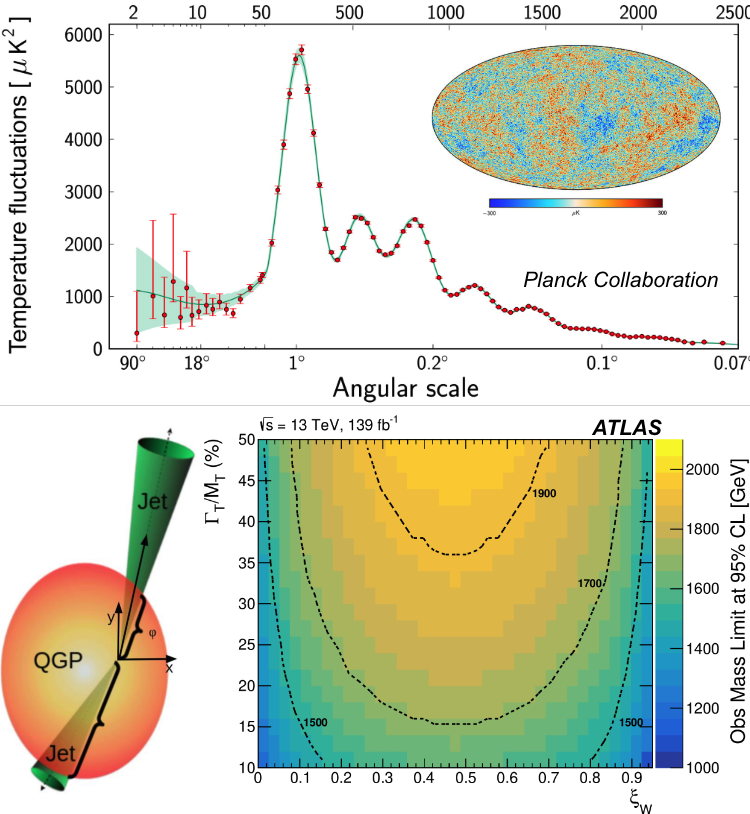

You have a dataset that seems to suggest you have found new physics beyond the standard model. You’d like to know if you can claim a discovery (and the associated Nobel prize) or if you should hold off until you have more data.

You are turning on a new particle collider and there are concerns that it would end the earth Will relativistic heavy-ion colliders destroy our planet?; Review of Speculative “Disaster Scenarios” at RHIC; disaster scenarios at the LHC

What are the chances that the Trinity Atomic bomb test would ignite the atmosphere? Washington Post summary

The last two are not generally quantitative probability statements, but I put them here to highlight the range of complication and importance in interpreting data. In all of these cases though, the probabilistic data is turned into an action: treatment of the condition, a claim of discovery, turning on the accelerator.

Confidence Limits#

In week 6, we discussed the Gaussian probability distribution (slides). There we looked at for a known probability distribution, what are the probabilities of having measurements at a given value.

Confidence Intervals from Data#

Let’s suppose you have an estimator of the quantity of interest, \(\hat{\theta}(x_1, x_2, ..., x_n)\) based on \(n\) measurements. Additionally, let’s say that you don’t know the true value \(\theta\) but for a given value of \(\theta\) you know what the pdf of \(\hat{\theta}\) would be, \(g(\hat{\theta};\theta)\) (let the cdf for this pdf be \(G(\hat{\theta};\theta)\)).

(the following discussion follows Cowan Chap. 9).

Now we can talk about the probabilities: $\(\alpha = P(\hat{\theta} ≥ u_{\alpha}(\theta))= \int_{u_{\alpha}(\theta)}^{\infty} g(\hat{\theta};\theta)d\hat{\theta}\)\( In words \)\alpha\( is the probability to observe \)\hat{\theta} > u_{\alpha}\(. On the other side of the probability distribution, we can define \)\(\beta = P(\hat{\theta} ≤ u_{\beta}(\theta))= \int_{-\infty}^{u_{\beta}(\theta)} g(\hat{\theta};\theta)d\hat{\theta}\)$

A few things to note:

The values \(u_{\alpha}(\theta)\) and \(u_{\beta}({\theta})\) depend on the true value \(\theta\) and the values of \(\alpha\) and \(\beta\) depdend on \(\hat{\theta}\) and \(\theta\).

The second integral is clearly just the cdf evaluated at \(u_{\beta}(\theta)\): \(\beta = P(\hat{\theta} ≤ u_{\beta}(\theta)) = G(u_{\beta};\theta)\)

The first integral can be written as \( \int_{u_{\alpha}(\theta)}^{\infty} g(\hat{\theta};\theta)d\hat{\theta} = \int_{-\infty}^{\infty} g(\hat{\theta};\theta)d\hat{\theta} - \int_{-\infty}^{u_{\alpha(\theta)}} g(\hat{\theta};\theta)d\hat{\theta} = 1 - G(u_{\alpha(\theta)}; \theta)\)

The probability that \(\hat{\theta}\) is outside the range given by \(u_{\alpha(\theta)}\) and \(u_{\beta(\theta)}\) is \(\alpha + \beta\) so the probability that it between this range is \(1 - \alpha - \beta\)

Recapping, we’ve shown is: $\(P(v_{\beta}(\theta) \le \hat{\theta} \le u_{\alpha}(\theta)) = 1 - \alpha - \beta\)\( However, we don't know what the true value of \)\theta$ actually is.

But what if we can come up with inverse functions such that \(\hat{\theta} \ge u_{\alpha}(\theta)\) implies \(a(\hat{\theta}) \ge \theta\) and likewise that \(\hat{\theta} \le v_{\beta}(\theta)\) implies \(b(\hat{\theta}) \le \theta\). This is a reasonable assumption.

Graphically, the choice of \(\alpha\) and \(\beta\) determines the \(u\) functions, then the intersection of \(\theta_{obs}\) with the \(u\) functions (the range between \(a\) and \(b\) determines the range of \(\theta_{true}\) satistifying the probability criteria. The range between \(a\) and \(b\) is the confidence interval at confidence level (or coverage probability) \(1 - \alpha -\beta\).

By having a value of \(\theta_{obs}\), we constrain, \(\theta_{true}\)

\(a\) is the hypothetical value for \(\theta_{true}\) for which a fraction \(\alpha\) of the estimates \(\hat{\theta}\) would be higher than \(\theta_{obs}\)

\(b\) is the hypothetical value for \(\theta_{true}\) for which a fraction \(\alpha\) of the estimates \(\hat{\theta}\) would be lower than \(\theta_{obs}\)

If the experiment is repeated \(a\) and \(b\) will change. We expect that the true value \(\theta_{true}\) will be included in the range between \(a\) and \(b\) in \(1 - \alpha -\beta\) of the experiments.

Graphically, we can see that in any scenario \(\theta_{obs} = u_{\alpha(\theta)} = u_{\beta(\theta)}\).

Going back to the beginning, we can rewrite:

and $\(\beta = \int_{-\infty}^{u_{\beta}(\theta)} g(\hat{\theta};\theta)d\hat{\theta} = \int_{-\infty}^{\theta_{obs}} g(\hat{\theta};b)d\hat{\theta} = G(\theta_{obs};b)\)$

Types Confidience Intervals

Central Confidence Interval this is when \(\alpha = \beta\)

limit this is a one sided confidence interval where \(\alpha\) or \(\beta\) is 0

Special cases

Gaussian PDFs#

As usual, it’s worth knowing what goes on in a Gaussian PDF. This stems from our discussion of the Central Limit Theorem (Week 3). For Gaussian pdfs, we know the coverage probability for central intervals.

1\(\sigma\) has a 68.3% coverage

2\(\sigma\) has 95.4% coverage

1.645\(\sigma\) has 90% coverage

Poisson PDFs#

Recall the Poisson PDF is written as: $\(f(k;\lambda) = \frac{e^{-\lambda} \lambda^k}{k!}\)\( where \)\lambda\( is the mean and variance of the distribution and \)k\( is an integer. \)\lambda$ can be any positive number.

The observed value is \(\lambda_{obs}\) which is an integer. This turns the equations for \(\alpha\) and \(\beta\) into sums: $\(\alpha = \int_{\theta_{obs}}^{\infty} g(\hat{\theta};a)d\hat{\theta} \)\( becomes: \)\(\alpha = \sum_{n = n_{obs}}^{\infty}f(n;a) = 1 - \sum_{n = 0}^{n_{obs}-1}f(n;a)\)\( and \)\(\beta = \int_{-\infty}^{\theta_{obs}} g(\hat{\theta};b)d\hat{\theta} \)\( becomes: \)\(\beta = \sum_{n = 0 }^{n_{obs}}f(n;b) \)$ and this is solvable because we know the pdf for a Poisson.

Interestingly, the sum $\(\sum_{n =0}^{n_{obs}} f(k;\lambda) = \sum_{n = 0 }^{n_{obs}} \frac{e^{-\lambda} \lambda^{k}}{k!} = \int_{2k}^{\infty} f_{\chi^2}(z;n_d = 2(n_{obs} + 1))dz\)\( where \)f_{\chi^2}(z;n_d)\( is the pdf for the \)\chi^2\( distribution. This relation can be used to get \)a\( and \)b\( for given values of \)\alpha\( and \)\beta$.

Limits Near Unphysical Boundaries What if you have measured something close to zero that can’t be negatve? Think about a yield of particles, a mass or a decay rate. Physics requires that these values be non-negative, however, depending on how your experiment is setup you can completely measure a central value that it is in the unphysical region.

For example, let’s say you measured \(m\) of a neutrino to be -0.4 eV with a 68% CL in the range of -0.6 to -0.2 eV (the current upper limit on the sum of all three neutrino masses is 0.8 eV at the 90% CL.

If you think you can’t measure a non-physical mass, consider a case where you measure \(E^2\) and \(p^2\) and then calculate \(m^2 = E^2 - p^2\). Depending on the setup getting a negative \(m^2\) is certainly possible. The same kind of thing can happen with rates or yields in cases where there is background that must be subtracted off.

First, don’t panic assuming that there is nothing wrong with the measurement itself, this is fine. In some fraction of the cases, the true value of what you’re trying to measure will be outside the confidence interval. If you have measured an unphysical value, the measured value and uncertainty should still be reported so that they can be combined/compared with other values (many of which will presumably be in the physical region).

Going back to the neutrino example, the entire 68% CL is in the unphysical region. We are clearly interested in reporting an upper limit in this case. \(1-\beta\) for a Gaussian at the 95% CL is 1.65\(\sigma\) which yields a 95% upper limit following the conventional procedure as -0.3 eV.

One might consider widening the CL to 99% upper limit (which is about 2.33\(\sigma\) for a Gaussian), then the upper limit on the mass is 0.07 eV. This finally includes some physical values. Here the issue is you are stating a very tight limit on the \(m\). A The resolution of your experiment is about 0.2 eV so it doesn’t seem like you should be able to place such tight limits on \(m\) given the measurement you are doing. Moreover, the vast majority of the distribution allowed in this case is in the unphysical region and that knowledge is no where reflected in what you’ve done.

One technique is to just shift the measurement into the physical region before calculating the upper limit. The advantage of this is that the limit will be in-line with the resolution of your measurement. The disadvantage is that you’ve lost the information from your measurement itself and the interpretation of the CL in terms of the probability described above no longer applies.

This scenario is actually very well suited to a Bayesian analysis. Going back to the likelihood function. Given the data \(x\) and a particular true value of the parameter, \(\theta\): \(L(x|\theta)\) is the likelihood function. We want, is the conditional pdf \(p(\theta|x)\) for \(\theta\) given the data \(x\). Recall Bayes theorem:

putting in the pdf we want for \(P(A|B)\) we get:

Great, except we need the prior probability \(\pi(\theta)\). The advantage of this is that we can incorporate our physical knowledge, in this case that the mass is non-negative, into \(\pi(\theta)\). We can then have a nice pdf \(p(\theta|x)\) with which to generate our CL as above. Because of the constraints on \(\pi(\theta)\) it will naturally be zero in the non-physical region.

However, what about the form of \(\pi(\theta)\) in the physical region. Here there is no way to procede without making an assumption. The simplest choice is to make it flat, e.g. \(\pi(\theta) = 1\) in the physical region and 0 outside of it. This might be a good choice but in our example (neutrinos) we know that the mass scales involved are small so we might choose something that incorporates that knowledge like \(\pi(\theta) = 1/\theta\) for positive \(\theta\) values.

No matter what you do, it should clearly be stated what was assumed and why. Exploring the sensitivity to the prior is often done in Bayesian analyses and is very informative.

Below is an illustration of what the 95% CL could look like under the defaul “classical” procedure, shifting the observed value to be in the physical region (“shifted”) and a Bayesian procedure (Fig from Cowan). For observed values in the physical region the three methods agree (as they should) but when the observations are in the unphysical region, they differ by quite a lot in many places.

Confidence Ellipses#

In the above, we were discussing single parameter constraints. However, in many cases one is trying to constrain two or more parameters at once. In that case you have confidence ellipses. In some cases, it’s possible to work out the math analytically, but in many cases, it’s the most useful to just calculate the correlation numerically. Here is a nice example of calculating and plotting those from the matplotlib documentation.

def confidence_ellipse(x, y, ax, n_std=3.0, facecolor='none', **kwargs):

"""

Create a plot of the covariance confidence ellipse of *x* and *y*.

Parameters

----------

x, y : array-like, shape (n, )

Input data.

ax : matplotlib.axes.Axes

The axes object to draw the ellipse into.

n_std : float

The number of standard deviations to determine the ellipse's radiuses.

**kwargs

Forwarded to `~matplotlib.patches.Ellipse`

Returns

-------

matplotlib.patches.Ellipse

"""

if x.size != y.size:

raise ValueError("x and y must be the same size")

cov = np.cov(x, y)

pearson = cov[0, 1]/np.sqrt(cov[0, 0] * cov[1, 1])

# Using a special case to obtain the eigenvalues of this

# two-dimensional dataset.

ell_radius_x = np.sqrt(1 + pearson)

ell_radius_y = np.sqrt(1 - pearson)

ellipse = Ellipse((0, 0), width=ell_radius_x * 2, height=ell_radius_y * 2,

facecolor=facecolor, **kwargs)

# Calculating the standard deviation of x from

# the squareroot of the variance and multiplying

# with the given number of standard deviations.

scale_x = np.sqrt(cov[0, 0]) * n_std

mean_x = np.mean(x)

# calculating the standard deviation of y ...

scale_y = np.sqrt(cov[1, 1]) * n_std

mean_y = np.mean(y)

transf = transforms.Affine2D() \

.rotate_deg(45) \

.scale(scale_x, scale_y) \

.translate(mean_x, mean_y)

ellipse.set_transform(transf + ax.transData)

return ax.add_patch(ellipse)

def get_correlated_dataset(n, dependency, mu, scale):

latent = np.random.randn(n, 2)

dependent = latent.dot(dependency) #multiplies the two matrices together

scaled = dependent * scale

scaled_with_offset = scaled + mu

# return x and y of the new, correlated dataset

return scaled_with_offset[:, 0], scaled_with_offset[:, 1], scaled_with_offset

np.random.seed(0)

PARAMETERS = {

'Positive correlation': [[0.85, 0.35],

[0.15, -0.65]],

'Negative correlation': [[0.9, -0.4],

[0.1, -0.6]],

'Weak correlation': [[1, 0],

[0, 1]],

}

mu = 2, 4

scale = 3, 5

fig, axs = plt.subplots(1, 3, figsize=(9, 3))

for ax, (title, dependency) in zip(axs, PARAMETERS.items()):

x, y, tmp = get_correlated_dataset(800, dependency, mu, scale)

ax.scatter(x, y, s=0.5)

ax.axvline(c='grey', lw=1)

ax.axhline(c='grey', lw=1)

confidence_ellipse(x, y, ax, edgecolor='red')

ax.scatter(mu[0], mu[1], c='red', s=3)

ax.set_title(title)

plt.show()

fig, ax_nstd = plt.subplots(figsize=(6, 6))

dependency_nstd = [[0.8, 0.75],

[-0.2, 0.35]]

mu = 0, 0

scale = 8, 5

ax_nstd.axvline(c='grey', lw=1)

ax_nstd.axhline(c='grey', lw=1)

x, y, line_data = get_correlated_dataset(500, dependency_nstd, mu, scale)

ax_nstd.scatter(x, y, s=0.5)

confidence_ellipse(x, y, ax_nstd, n_std=1,

label=r'$1\sigma$', edgecolor='firebrick')

confidence_ellipse(x, y, ax_nstd, n_std=2,

label=r'$2\sigma$', edgecolor='fuchsia', linestyle='--')

confidence_ellipse(x, y, ax_nstd, n_std=3,

label=r'$3\sigma$', edgecolor='blue', linestyle=':')

ax_nstd.scatter(mu[0], mu[1], c='red', s=3)

ax_nstd.set_title('Different standard deviations')

ax_nstd.legend()

plt.show()

#df = pd.DataFrame(data=line_data[1:,0:], columns=['x','y'])

#sns.jointplot(df,x='x',y='y');

Hypothesis Testing#

Now we want to make a statement. Have we discovered Dark Matter? Do neutrinos oscillate?

Here we are in the realm of hypothesis testing. You want to tell the difference between the null hypothesis and the alternative hypothesis.

In a straightforward example of the Higgs discovery, the null hypothesis could be the case where the there is no Higgs and the alternative hypothesis could be that there is a Higgs.

A simple hypothesis is one which is completely specified, whereas a composite hypothesis is one involving free parameters.

Let’s say we have some measurements \(x = x_1, x_2, ... x_n\) and two hypotheses we are trying to distinguish, \(H_0\) (the null hypothesis) and \(H_1\), the alternative hypothesis. There is a pdf associated with each hypothesis: \(f(x|H_0)\) and \(f(x|H_1)\). We don’t know which pdf representats nature. Figuring that out is the goal.

We’ll construct a test statistic \(t(x)\) which is a function of the measurements \(x\). We could just use the measurements themselves as \(t(x)\) (and in some cases it is done) but there is an advantage to having \(t(x)\) be a simpler (lower dimensional) variable than \(x\) (both \(t(x)\) and \(x\) can be multidimensional). There are pdfs associated with \(t(x)\) as well: \(g(t(x)|H_0)\) and \(g(t(x)|H_1)\).

The goal now is to decided where to optimally select \(t(x)\). Remember both \(g(t(x)|H_0)\) and \(g(t(x)|H_1)\) are pdfs and thus have a total integral of one. The goal is to select a value \(t_{cut}\) to optimally distinguish between \(H_{0}\) and \(H_{1}\).

Considerations#

It’s clear in the example above that selecting \(t_{cut} > 5\) to accept \(H_{1}\) would ensure that there was basically no chance \(H_{0}\) was true when \(H_{1}\) was accepted. However the downside of that is that about half the chances that \(H_{1}\) should be accepted will be excluded by that selection.

Quantifying this:

and $\(\beta = \int_{-\infty}^{t_{cut}} g(t|H_1)dt\)$

\(\alpha\) is the significance level of the test (in the above example \(t_{cut} > 5\) would have a significance level of just less than one.

\(1 - \beta\) is the power of the test; in our example above this is about 0.5.

These quantities highlight the trade offs in hypothesis esting. These are typically encapsulated into two types of errors.

Type 1 error: rejecting the null hypothesis when it is true. The probability of doing this is \(\alpha\). Think of this as a false positive.

Type 2 error: accepting the null hypothesis when it is false. The probability of doing this is \(\beta\). Think of this as a false negative.

The example of the Higgs discovery above minimizes the probability of Type 1 errors and accepts a higher probability of Type 2 errors.

null_mean = 0

null_width = 1

test_mean = 3

test_width = 2.5

fig, ax = plt.subplots(2)

mean, var, skew, kurt = norm.stats(loc=-10,moments='mvsk')

x = np.linspace(norm.ppf(0.01,loc=null_mean,scale=null_width),norm.ppf(0.999,loc=test_mean,scale=test_width), 200)

ax[0].plot(x, norm.pdf(x,loc=null_mean, scale=null_width), 'r-', lw=5, alpha=0.6, label='null pdf')

ax[0].set_title("null hypothesis")

ax[1].plot(x, norm.pdf(x,loc=test_mean, scale=test_width), 'r-', lw=5, alpha=0.6, label='signal pdf')

ax[1].set_title("alternative hypothesis")

m_critical = 2.5 # value at which you descriminate...

#the critical value determines alpha & beta

alpha= 1 - norm.cdf(m_critical,loc=null_mean, scale=null_width)

print("alpha:", alpha)

beta = norm.cdf(m_critical,loc=test_mean, scale=test_width)

print("beta:",beta)

m_critical_min = -2

m_critical_max = 4

values = np.linspace(m_critical_min,m_critical_max,num=100)

arr = np.empty((0,2))

for mc in values:

a1 = 1 - norm.cdf(mc,loc=null_mean, scale=null_width)

b1 = norm.cdf(mc,loc=test_mean, scale=test_width)

arr = np.append(arr,[[a1,b1]],axis=0)

fig1, a2d = plt.subplots(1)

a2d.plot(arr[:,0], arr[:,1], 'r-', lw=5, alpha=0.6, label='blah')

a2d.set_title("beta as a function of alpha")

If the relative expected rate of background compared to signal, \(R\), is small, then you might be able to accept a higher value of \(\beta\) because it is suppressed by the overall value small rate of background events.

Neyman-Pearson Lemma#

In a single dimension, there is one value of \(\beta\) for every value of \(\alpha\). If you go to a multidimensional case though, then how do you know you are getting the most powerful test. The answer is the Neyman-Pearson Lemma. This states that the most powerful test you can do is a ratio of the likelihood of the null hypothesis compared to the alternative hypothesis: $\(\frac{L(H_0)}{L(H_1)} > c\)$

where \(c\) is some value. If \(t\) is one dimensional, as above, then this is just the ratio of the pdfs.

If \(t\) is multidimensional, then this provides a way to construct the best possible test for a given value of \(\alpha\).

An example#

Let’s say you want to distinguish between two hypotheses, \(H_0\) and \(H_1\). In both cases, the pdf can be described as Gaussians with parameters \(\mu_0\), \(\sigma_0\) for \(H_0\) and \(\mu_1\) and \(\sigma_1\) for \(H_1\). You have \(n\) measurements to distingush between the hypothesis.

If \(n = 1\), then we just have something very similar to the plot above. You decide on the significance of the test, \(\alpha\) that you want and \(t_{cut}\) is determined.

Now let’s say \(n = 16\). You want one answer with a significance \(\alpha\). Do your data prefer \(H_0\) or \(H_1\). You have 16 data points and it’s very possible that, of those, some are on both sides of the \(t_{cut}\) value determined in the \(n=1\) case.

We know that we need to find the ratio of the likelihoods to get the best possible test. How does that work in practice?

Let’s call the individual data values \(X_1, X_2, ...X_{16}\). Let’s say these values have a mean \(\mu\) and variance 16.

What’s the best critical region, that is, find the most powerful test with a significance level of \(\alpha=0.05\) to test the simple null hypothesis \(H_0\) where \(\mu =\mu_0 = 10\) against the simple alternative hypothesis \(H_1\) where \(\mu = \mu_1= 15\)?

Since \(H_0\) and \(H_1\) are Gaussians, we know the pdfs. The normalization factors in front of the Gaussians drop out and we are left with: $\(t = \frac{exp(-\frac{1}{2{\sigma}^2}\sum_{i=1}^{N}(X_i-\mu_0)^2)}{exp(-\frac{1}{2{\sigma}^2}\sum_{i=1}^{N}(X_i-\mu_1)^2)}\)\( \)\(t = \frac{exp(-\frac{1}{32}\sum_{i=1}^{16}(X_i-10)^2)}{exp(-\frac{1}{32}\sum_{i=1}^{16}(X_i-15)^2)}\)\( (the likelihood of the probability of all 16 measurements is a product of the 16 individual probabilities). We want this ratio to be less than some value \)k$ (which we don’t yet know).

Simplifying the math… $\(exp(-\frac{1}{32}(\sum_{i=1}^{16}(X_i-10)^2 - \sum_{i=1}^{16}(X_i-15)^2)) < k\)\( and then expanding each sum: \)\(\sum_{i=1}^{16}(X_i-M)^2 = \sum_{i=1}^{16} X_i^2 - 2 \dot M \sum_{i=1}^{16} X_i + 16 M^2\)\( leaves \)\(exp(-\frac{1}{32}(-20\sum_{i=1}^{16}X_i +1600 +30 \sum_{i=1}^{16}X_i - 3600)) < k\)\( \)\(exp(-\frac{1}{32}(10\sum_{i=1}^{16}X_i -2000 )) < k\)\( finally: \)\(-10\sum_{i=1}^{16}X_i + 2000 < 32\ln k\)\( Also \)\(\mu = \sum_{i=1}^{16}X_i/16\)\( so now, \)\(\mu \ge -160(32\ln k - 2000)\)$

So now we just have to integrate the null hypothesis to find the value of \(k\). Let’s say we want \(\alpha = 0.05\). We want the 95% point on the cdf of a Gaussian centered at 10 with a width of \(\sigma / \sqrt{16} = 1\) (the variance and the sample size are both 16).

The value of the cut on \(\mu\) is then \(\kappa = 10 + 1.65 = 11.65\). Note that this value depends on the mean and variances of the null hypothesis and the number of samples in your measurement.