import matplotlib.pyplot as plt

import seaborn as sns; sns.set()

import numpy as np

import pandas as pd

from random import gauss

from random import random

from numpy.linalg import inv

!pip install pyunfold

from pyunfold import iterative_unfold

from pyunfold.callbacks import Logger

import matplotlib.cm as cm

import scipy as sp

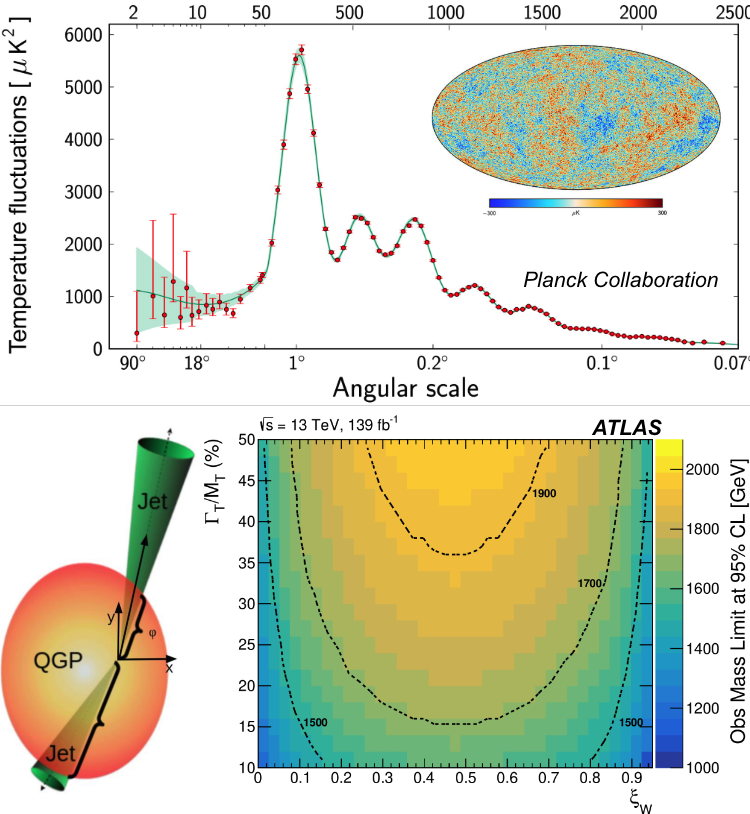

Unfolding#

Response#

Your measurement isn’t perfect. For example:

there is a finite resolution to the detector. Often this will take the place of a Gaussian (or Gaussian-like) smearing around the true value.

your measurement could be systematically off in scale (e.g. your ruler is 0.5cm too long)

you can have an inefficiency in your detector either from the finite apature or some random loss.

These effects all go into the measurement response.

Every measurement suffers from this to some degree. The issue is that experiments with different responses can leave to different measurements.

Mathetmatically: $\(\vec{M} = \bf{R} \vec{T}\)\( If two experiments have different responses \)\bf{R_a}\( and \)\bf{R_b}\( but are measuring the same quantity \)\vec{T}\(, then \)\vec{M_a}\( and \)\vec{M_b}$ will be different.

\(\bf{R}\) is generally a matrix and it can contain:

inefficency of the detector

the inherent resolution of the measurement process

other effects

Determining the response#

The main way in which experimenters determine the response of a measurement is through simulations. Here the simulations model the interaction of a simulated singal (similar to what is expected for the true values) are input into a model of the detector and the expected output is given. This process is a Monte Carlo.

This process can be incredibly detailed and usually requires more computing power than analyzing the data itself. In many parts of physics/medicine, Geant is used. This simulates the passage of particles and radiation through matter based on decades of measurements for specific materials and energies. Other examples are various ray tracking programs for optics. From this, it is possible to determine the response matrix.

Anything requiring a simulation is of course only as good as the simulation itself and all of these tools are imperfect to some degree. Usually experimenters try to do as many checks as possible of the simulation using data itself.

(This process of generating the response from a given model is actually a convolution which we’ll discuss at the end.)

Incorporating the Response into Interpretation#

If the \(\bf{R}\) and \(\vec{M}\) are known then \(\vec{T}\) is constained. There is a choice as to whether to report \(\bf{R}\) and \(\vec{M}\) or to try to constrain \(\vec{T}\) itself. The advantage of reporting \(\bf{R}\) and \(\vec{M}\) is the simplicity. The disadvantage, clearly, is not reporting the actual quantity of interest \(\vec{T}\).

However, getting to \(\vec{T}\) can be a complicated process, as we’ll see below.

The most obvious way to get \(\vec{T}\) is to invert \(\bf{R}\) to get: $\(\bf{R^{-1}}\vec{M} = \bf{R^{-1}}\bf{R} \vec{T}\)\( \)\(\bf{R^{-1}}\vec{M} = \vec{T}\)$

We’ll consider that as a first step, and then discuss unfolding which is a more stable proceedure to get \(\vec{T}\) from \(\bf{R}\) and \(\vec{M}\).

Unfolding#

Unfolding is the process of obtaining \(\vec{T}\) from \(\vec{R}\) and \(\vec{M}\). We’ll first show the issues with the simplest methods and then demonstrate standard unfolding methods. Finally, we’ll discuss approaches which use machine learning to improve unfolding.

We will use pyunfold for the standard unfolding. This package implements a Bayesian method described in this paper which is widely used at the LHC.

Generating a simple dataset and response#

We have two classes of points:

data are those which are from nature

data_true (\(\vec{T}\)) is what one is trying to measure

data_reco (\(\vec{M}\)) is what is measured in the detector

MC samples are necessary to determine \(\vec{R}\)

MC_true is a simulated signal

MC_reco is the MC_true signal ran through your detector model

All of this will be generated here but in a real setup the data would be related to the measurement.

The goal is to know data_true from a measurement of data_reco using information from MC_true and MC_reco.

All of the above are values themselves, when we put them in histograms, we’ll append “_hist” to the name.

For both data_true and MC_true I’ve chosen an exponential function (and the same exponential for both of them). In real life, this would likely be significantly more complicated and it is unlikely that the MC model and the data itself would be drawn from exactly the same underlying distribution.

The data_true and MC_true samples are statistically independent due to different seeds in the random number generator.

I’ve also chosen to make 10 times more MC samples than data samples. If possible it is generally better to have many more MC samples than data samples so that the statistical uncertainties are limited by the data rather than the MC.

sns.set_context(context='poster')

plt.rcParams['figure.figsize'] = (10, 8)

plt.rcParams['lines.markeredgewidth'] = 2

hrange = 5

nbins = 51

num_samples = int(1e4)

MC_true = np.random.exponential(scale=1, size=num_samples)

data_true = np.random.exponential(scale=1, size=10*num_samples)

print(data_true[:5])

print(MC_true[:5])

bins = np.linspace(0, hrange, nbins)

num_bins = len(bins) - 1

data_true_hist, _ = np.histogram(data_true, bins=bins, range=(0,hrange))

MC_true_hist, _ = np.histogram(MC_true, bins=bins, range=[0,hrange])

fig, ax = plt.subplots()

ax.step(bins[:nbins-1],MC_true_hist)

ax.set(xlabel='x value', ylabel='Counts')

ax.legend()

plt.show()

Modeling detector effects#

Now let’s mess up the truth to mimic real detector effects.

Instead of a detailed Monte Carlo in this case we will just add noise via:

a shift in the mean (noise_shift) with a Gaussian smearing (with width noise_width).

There shouldn’t be too many samples where the random_noise would be a negative number but we’ve added an absolute value just to make sure that all of the measured values remain non-negative. This isn’t necessary from an unfolding point of view but it does keep the histograms from having negative x-axis values so it’s just a bit simpler.

This process gives what we think our detector actually measures given these truth samples.

noise_width = 0.2

noise_shift = 1.1

random_noise = np.random.normal(loc=noise_shift, scale=noise_width, size=num_samples)

random_noise = abs(random_noise) # here we're just going to ensure that all elements remain positive

MC_reco = MC_true * random_noise

MC_reco_hist, _ = np.histogram(MC_reco, bins=bins)

fig, ax = plt.subplots()

ax.step(bins[:nbins-1],MC_true_hist, label='MC True')

ax.step(bins[:nbins-1],MC_reco_hist, label='MC Reco')

ax.set(xlabel='x value', ylabel='Counts')

ax.legend()

plt.show()

The MC_reco_hist and MC_true_hist histograms are clearly different but the MC_reco and MC_true should maintain their correlation line by line.

print(MC_reco[:6])

print(MC_true[:6])

Now, we create the response matrix (response_hist). This is a 2D histogram that contains the correlation between each element in MC_true and MC_reco.

Additionally, the pyunfold software requires an efficiency histogram. In our case, we’ll assume perfect efficiency with no uncertainty on the efficiency, so it’s just a histogram of ones and the efficiency error histogram is just a histogram of zeros.

MC_reco_err_hist = np.sqrt(MC_reco_hist)

efficiencies = np.ones_like(MC_reco_hist, dtype=float)

efficiencies

efficiencies_err = np.full_like(efficiencies, 0.0, dtype=float)

efficiencies_err

response_hist, _, _ = np.histogram2d(MC_reco, MC_true, bins=bins) #this is the proper ordering for the axes

response_hist_err = np.sqrt(response_hist)

Here we are going to require each column (truth bin) to sum up to the efficiency value (here we’ve just taken all the efficiencies to be unity so all the columns will add up to one). This is equivalent to saying that every truth value will be measured somewhere.

We can slice up the response matrix to get a look at where the true values come from for a particular measured value.

slice = response_hist[5:6,1:50]

fig, ax = plt.subplots()

ax.step(bins[:nbins-2],np.reshape(slice,49), label='MC True for MC reco bin 5')

ax.set(xlabel='MC true value', ylabel='Counts')

ax.legend()

plt.show()

column_sums = response_hist.sum(axis=0)

normalization_factor = efficiencies / column_sums

response = response_hist * normalization_factor

response_err = response_hist_err * normalization_factor

#checking...

response.sum(axis=0)

inv_response_hist = inv(response)

fig, ax = plt.subplots()

im = ax.imshow(response, origin='lower')

cbar = plt.colorbar(im, label='$P(E_i|C_{\mu})$')

ax.set(xlabel='MC_true bins', ylabel='MC_reco bins',

title='Normalized response matrix')

plt.show()

So now we have the response matrix and it’s inverse so we can try: $\(\bf{R^{-1}}\vec{M} = \vec{T}\)$

Let’s just try this only on the MC_* sample. Can we recover MC_true by this process?

MC_reco_inv = np.matmul(inv_response_hist,MC_reco_hist)

fig, ax = plt.subplots()

ax.step(np.arange(num_bins), MC_true_hist, where='mid', lw=3,

alpha=0.7, label='MC_true')

ax.step(np.arange(num_bins), MC_reco_hist, where='mid', lw=3,

alpha=0.7, label='MC_reco')

ax.step(np.arange(num_bins), MC_reco_inv, where='mid', lw=3,

alpha=0.7, label='from matrix inversion')

ax.set(xlabel='X bins', ylabel='Counts')

ax.legend()

plt.show()

Well that didn’t work well. The green curve looks vaguely like the original distribution but it has far too many fluctuations to be useful in any way. Why? Well, let’s take a look at this inverse matrix. What does it look like when we just let the truth equal the observations? It’s easy to go back and turn the noise down to zero. Seeing that then the inversion works is a trivial but important check.

(Also, run the process of generating the “observed” points that go into the response matrix multiple times; you will see that the inverse of the response matrix changes noticably depending on the particular random number set.)

Finally, the matrix inversion method requires the response to be a square matrix; from the physics point of view, the response can be square, but need not be.

fig, ax = plt.subplots()

im = ax.imshow(inv_response_hist, origin='lower')

cbar = plt.colorbar(im, label='')

ax.set(xlabel='Cause bins', ylabel='Effect bins',

title='Inverse of response matrix')

plt.show()

Unfolding#

So where do we go from here? The basic idea is unfolding. This improves on the matrix inversion by including some additional information. Usually there is a preference for a smooth final distribution. This usually makes sense from a physical point of view but there are some clear dangers here.

We’re going to discuss Iterative Bayesian Unfolding. This is based on Bayes’ Theorem (go back to the beginning when we discussed the basics of probability). This method is documented here. This is used extensively at the LHC, neutrino detectors, DM searches…

Let’s start with Bayes theorem: $\( P(C_i|E) = \frac{P(E|C_i) P(C_i)}{\sum_i P(E|C_i) P(C_i)}\)\( where the \)C_i\( are each independent causes which can produce a single effect \)E\(. Here the conditional probability of cause \)C_i\( to produce the effect \)E\( is \)P(E|C_i)\( and the initial probability of causes is \)P(C_i)$.

One observes some number of effects \(E\), \(n(E)\) and one wants to know the distribution of causes, \(n(C_i)\) that causes those effects: $\(\hat{n}(C_i) = n(E) P(C_i|E) \)\( Most measurements are complicated and procudes a number of effects, so for each effect \)j\(, Bayes theorem applies: \)\(P(C_i|E_j) = \frac{P(E_j|C_i)P_{a}(C_i)}{n(E)}\)\( \)\(P(C_i|E_j) = \frac{P(E_j|C_i)P_{a}(C_i)}{\sum_lP(E_j|C_l)P_a(C_l)}\)$

Given a guess at \(P_{a}(C_i)\) (e.g. the prior) and our understanding from simulation of how the pdf for each effect bin in terms of the cause bin, \(P(E_j|C_i)\), is we can get a constraint on \(P(C_i|E_j)\) and then we have both terms on the rhs of: $\(\hat{n}(C_i) = n(E) P(C_i|E) \)$

Then we iterate. We calcuate \(\hat{n}(C_i)\) based on the intial guess and then use, those values as the new \(P_a(C_i)\). We iterate as many times as necessary until \(\hat{n}(C_i)\) is stable from one iteration to the next (we’ll discuss how to make this qualitative statement quantitative below).

First, let’s just try this on the MC itself and verify that we can recover MC_true_hist by unfolding MC_reco_hist.

We have everything we need for that, except a criteria for stopping the iteration. This is always something that takes time to establish. Here we’ll just set an arbitrary value.

ts_values = [0.0001]

print(ts_values)

unfolded_results = []

closure = []

closure_err = []

for i in range(1):

tmp_results = iterative_unfold(data=MC_reco_hist,

data_err=MC_reco_err_hist,

response=response,

response_err=response_err,

efficiencies=efficiencies,

ts_stopping=ts_values[i],

#ts='rmd',

efficiencies_err=efficiencies_err,

callbacks=[Logger()])

unfolded_results.append(tmp_results)

tmp_closure = tmp_results['unfolded'] / MC_true_hist

tmp_closure_err = tmp_results['sys_err'] / MC_true_hist

closure.append(tmp_closure)

closure_err.append(tmp_closure_err)

print(len(unfolded_results), len(closure_err))

colors = cm.rainbow(np.linspace(0, 1, len(ts_values)))

print(type(colors))

fig, ax = plt.subplots(1,2,figsize=(15, 10))

ax[0].step(np.arange(num_bins), MC_true_hist, where='mid', lw=3,

alpha=0.7, label='True distribution')

ax[0].step(np.arange(num_bins), MC_reco_hist, where='mid', lw=3,

alpha=0.7, label='Observed distribution')

for i in range(len(ts_values)):

ax[0].errorbar(np.arange(num_bins), unfolded_results[i]['unfolded'],

yerr=unfolded_results[i]['sys_err'],

alpha=0.7,

elinewidth=3,

capsize=4,

color=colors[i],

ls='None', marker='.', ms=10,

label='Bayesian unf. dist.')

ax[0].set(xlabel='X bins', ylabel='Counts')

ax[1].errorbar(np.arange(num_bins),closure[i], yerr=closure_err[i], color=colors[i])

ax[1].set(xlabel='X bins', ylabel='unfolded / truth')

plt.legend(loc='lower center')

plt.show()

Priors#

As with anything Bayesian, the selection of the prior central.

Choosing an appropriate prior affects the time to reach a the stopping condition. In PyUnfold, the default prior is a uniform distribution. Depending on the shape of the distribution and the knowledge you have about what the truth should be, this might or might not be an optimal choice.

In the example above, the truth distribution falls like an exponential. There are many cases where one could know that the distribution should be steeply falling like that and that might be a better place to start. Here we’ll set the prior to an exponential but not with the same slope as the real distribution.

alt_prior = np.random.exponential(scale=0.9, size=num_samples)

alt_prior_hist, _ = np.histogram(alt_prior, bins=bins, density=True)

alt_prior_hist /= np.sum(alt_prior_hist)

ts_values = [0.1, 0.01, 0.001, 0.0001]

alt_unfolded_results = []

alt_closure = []

alt_closure_err = []

for i in range(4):

tmp_results = iterative_unfold(data=MC_reco_hist,

data_err=MC_reco_err_hist,

response=response,

response_err=response_err,

efficiencies=efficiencies,

ts_stopping=ts_values[i],

prior=alt_prior_hist,

#ts='rmd',

efficiencies_err=efficiencies_err,

callbacks=[Logger()])

alt_unfolded_results.append(tmp_results)

tmp_closure = tmp_results['unfolded'] / MC_true_hist

tmp_closure_err = tmp_results['sys_err'] / MC_true_hist

alt_closure.append(tmp_closure)

alt_closure_err.append(tmp_closure_err)

colors = cm.rainbow(np.linspace(0, 1, len(ts_values)))

print(type(colors))

fig, ax = plt.subplots(1,2,figsize=(15, 10))

ax[0].step(np.arange(num_bins), MC_true_hist, where='mid', lw=3,

alpha=0.7, label='True distribution')

ax[0].step(np.arange(num_bins), MC_reco_hist, where='mid', lw=3,

alpha=0.7, label='Observed distribution')

for i in range(len(ts_values)):

labelwords = 'unfolded, stop: ' + str(ts_values[i])

ax[0].errorbar(np.arange(num_bins), alt_unfolded_results[i]['unfolded'],

yerr=alt_unfolded_results[i]['sys_err'],

alpha=0.5,

elinewidth=3,

capsize=4,

color=colors[i],

ls='None', marker='.', ms=10,

label=labelwords)

ax[0].set(xlabel='X bins', ylabel='Counts')

ax[1].errorbar(np.arange(num_bins),alt_closure[i], yerr=alt_closure_err[i], color=colors[i])

ax[1].set(xlabel='X bins', ylabel='unfolded / truth')

fig.legend()

plt.show()

It does converge faster in that case which improves the unfolding uncertainties.

Uncertainties#

Statistical uncertainties come from:

uncertainties in the data

limited size of the MC sample In both cases it is usually the case that bootstrapping the statistical uncertainties is the most robust method of determining them.

Systematic uncertainties largely come from the choice of the prior distribution. The choice of how to handle this depends on how much the unfolding is doing (how different are MC_reco and MC_true?) and what is known about the prior.

How to set the stopping condition?#

There is a trade off. The statistical uncertainties (correlated between the unfolded points) grow with every iteration, but unfolding further does allow more time to reach a stable working point. Usually, one takes a minimum in: $\(\sum_{bins} \left(\sigma^2_{stat} + \sigma^2_{conv}\right)\)\( where \)\sigma_{stat}\( is statistical uncertainty from a bin and \)\sigma_{conv}\( is the difference between the unfolded results for \)n\( and \)n-1$ iterations.

In another way of doing it, Pyunfold implements a variety of test statistics to determine the stopping condition.

One wants to iterate as few times as necessary because over-iterating causes large fluctuations/uncertainties.

What we’ve done so far is set up a nice example but we have been unfolding our MC_reco sample with a response matrix generated from MC_reco and MC_true. No where has anything like a real measurement come in.

Here we’ll go back to the data_true sample we generated above and generate data_reco. This sample was generated independently from MC_true and so this is the situation that one would have with a real measurement scenario. We will also generate an independent noise sample (but with the same parameters as above).

random_noise2 = np.random.normal(loc=noise_shift, scale=noise_width, size=num_samples)

data_reco = data_true * random_noise2

data_reco_hist, _ = np.histogram(data_reco, bins=bins)

data_reco_err_hist = np.sqrt(data_reco_hist)

data_unfolded_results = []

data_closure = []

data_closure_err = []

for i in range(len(ts_values)):

tmp_results = iterative_unfold(data=data_reco_hist,

data_err=data_reco_err_hist,

response=response,

response_err=response_err,

efficiencies=efficiencies,

ts_stopping=ts_values[i],

prior=alt_prior_hist,

#ts='rmd',

efficiencies_err=efficiencies_err,

callbacks=[Logger()])

data_unfolded_results.append(tmp_results)

tmp_closure = tmp_results['unfolded'] / data_true_hist

tmp_closure_err = tmp_results['sys_err'] / data_true_hist

data_closure.append(tmp_closure)

data_closure_err.append(tmp_closure_err)

colors = cm.rainbow(np.linspace(0, 1, len(ts_values)))

print(type(colors))

fig, ax = plt.subplots(1,2,figsize=(15, 10))

ax[0].step(np.arange(num_bins), data_true_hist, where='mid', lw=3,

alpha=0.7, label='data True distribution')

ax[0].step(np.arange(num_bins), data_reco_hist, where='mid', lw=3,

alpha=0.7, label='data reco distribution')

for i in range(len(ts_values)):

labelwords = 'unfolded, stop: ' + str(ts_values[i])

ax[0].errorbar(np.arange(num_bins), data_unfolded_results[i]['unfolded'],

yerr=data_unfolded_results[i]['sys_err'],

alpha=0.7,

elinewidth=3,

capsize=4,

color=colors[i],

ls='None', marker='.', ms=10,

label=labelwords)

ax[0].set(xlabel='X bins', ylabel='Counts')

ax[1].errorbar(np.arange(num_bins),data_closure[i], yerr=data_closure_err[i], color=colors[i])

ax[1].set(xlabel='X bins', ylabel='unfolded / truth')

fig.legend()

plt.show()

Here in the red points it’s possible to see the effects of iterating too many times (or setting the stopping condition too tight) because the point to point fluctuations have grown very large.

More than one dimension#

The case above is for a single dimension. Perhaps that is measuring the energy distribution of measured particles. There are a lot of situations where unfolding could be in multiple dimensions:

consider measuring both the position and energy of a particle. If the energy measurement is correlated with the position measurement than a two-dimensional unfolding might be warrented.

consider the measurement of two correlated particles, the measurement of one could impact the measurement of the other

It’s straightforward to conceptualize a single dimensional unfolding. The same procedure works in multiple dimensions, though it becomes more difficult to visualize because the unfolding matrix, \(\vec{R}\) is in \(2N\) dimensions.

Other types of unfolding#

One drawback of the histogram-based Bayesian approach discussed here is the histograms become very large and often sparsely populated in a multi-dimensional unfolding.

Another drawback of this approach is that unfolding can tell you only about distribution level quantities, nothing is sensitive to the details of a particular event.

Approaches using unbinned, machine learning approaches have tried to improve upon both of these concerns. A well-known approach is OmniFold (package) which promises: “We introduce OmniFold, an unfolding method that iteratively reweights a simulated dataset, using machine learning to capitalize on all available information. Our approach is unbinned, works for arbitrarily high-dimensional data, and naturally incorporates information from the full phase space.”. This approach is inspired by issues in high-energy physics but useable in other fields as well.