Statistics#

%matplotlib inline

import matplotlib.pyplot as plt

import seaborn as sns; sns.set()

import numpy as np

import pandas as pd

import os

import subprocess

from random import gauss

import scipy.stats

from scipy.stats import moment

from scipy.stats import poisson

import scipy.stats

from scipy.stats import moment

Data helpers

def wget_data(url):

local_path='./tmp_data'

subprocess.run(["wget", "-nc", "-P", local_path, url])

def locate_data(name, check_exists=True):

local_path='./tmp_data'

path = os.path.join(local_path, name)

if check_exists and not os.path.exists(path):

raise RuxntimeError('No such data file: {}'.format(path))

return path

Get Data#

wget_data('https://courses.physics.illinois.edu/phys398dap/fa2023/data/blobs_data.hf5')

Load Data#

blobs = pd.read_hdf(locate_data('blobs_data.hf5'))

Statistical Methods#

The boundary between probability and statistics is somewhat gray (similar to astronomy and astrophysics). However, you can roughly think of statistics as the applied cousin of probability theory.

Today we’re going to discuss the basics of characterizing a dataset in one or more dimensions using moments. These include things that you are probably reasonably familiar with, like the standard deviation and the mean and others that are probably farther from your experience.

In many areas of science these are important. For example:

Sometimes you just don’t have a model for what a distribution should be and so you just start characterizing it using moments (often starting with the mean and standard deviation).

Other times you have a model, but the data aren’t perfect…maybe you’ve got a lot of background or other noise

in the case of multiple variables, perhaps you want to understand how two (or more) quantities are correlated

Expectation Values#

Given a probability density \(P(x)\), and an arbitrary function \(g(x)\) of the same random variables, the expectation value of \(g\) is defined as:

Note that the result is just a number. Also, \(g\) might have dimensions, which do not need to match those of the probability density \(P\), so \(\langle g\rangle\) generally has dimensions. All of the integrals here are over the entire range that \(P(x)\) is defined, in general that is \(-\infty < x < \infty\), but of course some distributions are defined over a more restrictive range of \(x\); the integration ranges will be left off from here on out just to simplify the equations.

Sometimes the assumed PDF is indicated with a subscript, \(\langle g\rangle_P\), which is helpful, but more often not shown.

Raw Moments

When \(g(x) = x^n\), the resulting expectation value is called a raw moment of x and low order values have names:

\(n=0\), \(\langle g\rangle \equiv \int x^0 dx P(x) \;\) is just unity

\(n=1\) mean of x, \(\overline{x} \equiv \langle x\rangle \equiv \int x dx P(x) \;\).

\(n=2\) root-mean square (RMS) of x, \(\langle x^2\rangle \equiv \int x^2 dx P(x) \;\).

The raw moments can be defined in a similar way for any positive integer power of \(x\).

Central Moments

Additionally, it is useful to define moments centered about the mean of the distribution. These central moments of order \(n\) are defined as above with \(g(x) = \langle (x - \overline{x})^n \rangle\). The \(n=1\) central moment is always 0.

The \(n=2\) central moment is the variance of x, which combines the mean and RMS,

where \(\sigma_x\) is called the standard deviation of x or the width (as we will see the standard deviation of a Gaussian is the width parameter).

Characterization of Measurements

The mean and standard deviations are among the most used quantities in physics. If you know these quantities about a single dimensional dataset, then you actually know quite a lot, regardless of the details of the distribution itself.

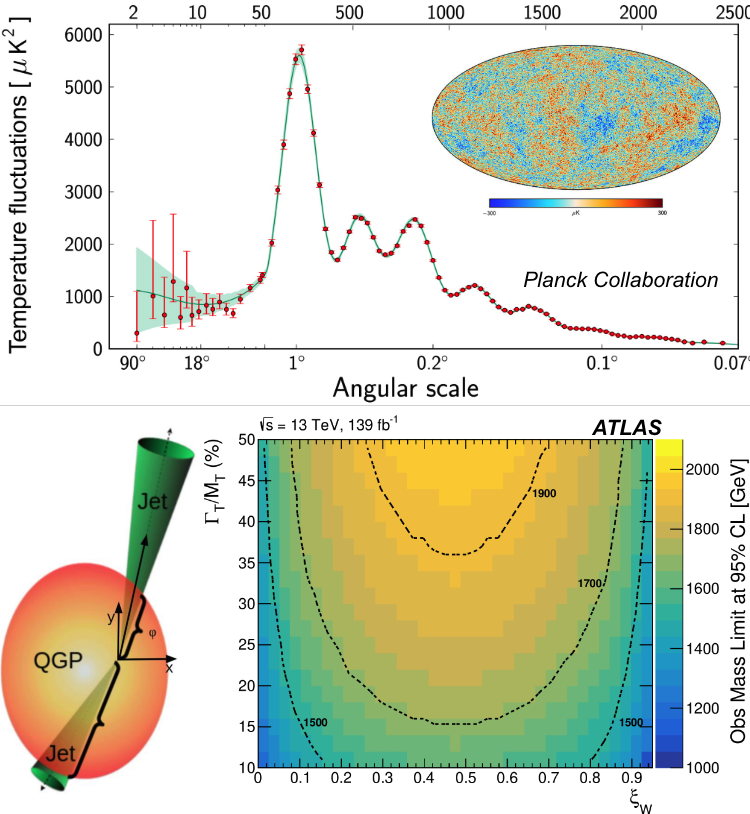

The standard deviation divided by the mean is a usual way of quantifying the precision of a measurement. For example, in high-energy physics, a calorimeter is a device for measuring particle energy.

Here is a paper about a calorimeter built at Illinois showing the mean energy measured as a function of the particle energy and showing the standard deviation of the measured energy distribution divided by the mean: https://arxiv.org/pdf/2003.13685.pdf (Fig. 8).

To go back to the QCD jets from a couple weeks ago, here is a paper showing the mean energy response of the ATLAS calorimeters to jets (the “JES”) and the standard deviation divided by the mean (the “JER”) https://arxiv.org/pdf/2205.00682.pdf (Fig. 1).

Standardized Moments

Finally, standardized moments can be defined. These are central moments normalized by powers of the standard deviation: $\( \Large \tilde{x}_N \equiv \langle\left( x - \overline{x} \right)^n\rangle / \sigma_{x}^n \; , \)$ This makes them scale-invarient and unitless. Thus they provide some information about the shape of the distribution:

\(n = 1\): The first standard moment, \(\tilde{x}_1\) is 0 (just like the \(n=1\) central moment).

\(n = 2\): The second standard moment is 1.

\(n = 3\): skewness The skewness of a distribution can be positive, negative or zero. A distribution that is symmetric about the mean will have zero skewness.

\(n = 4\): kurtosis The kurtosis measures the contributions from the tails of the distribution.

The standard deviation, skewness and kurtosis are useful in that they enable characterization of a distribution without having a specific functional form. In many real life cases this is very useful because there isn’t a clear cut choice for the functional form, because it is useful to not have to resort to fitting to describe a distribution, or because there are various types of background in a distribution.

However, in the majority of cases the standard deviation is far more useful than any of the standarized moments.

Warning: there are a few different definitions of some of these observables running around. Always check before blindly using a piece of code or a formula. scipy.stats moments (https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.moment.html) calculates central moments not the standard moments. You should check that you get the answer you expect for a known test case too.

Some Important Moments of Common Distributions

These are some important moments; this is clearly not a complete list, it’s just ones that are particularily useful to be aware of. For all the distributions, the means and variances are listed and for the Gaussian, a couple more are of interest. The integrals have been written out but not worked through.

Gaussian

Because of its ubiquity, the moments of a Gaussian turn up a lot and are worth keeping on hand.

so the mean is:

obviously.

The second central moment, the variance, is also significant in the Gaussian:

The skewness of a Gaussian should be zero because it is symmetric about the mean: $\( \Large \langle\left( x - \overline{x} \right)^3\rangle / \sigma^3 \; = \frac{1}{\sigma^3}\int dx \frac{\left(x-\mu\right)^3}{{\sigma \sqrt{2\pi}}} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right) \; \)$

The kurtosis of a Gaussian: $\( \Large \langle\left( x - \overline{x} \right)^4\rangle / \sigma^4 \; = \frac{1}{\sigma^4}\int dx \frac{\left(x-\mu\right)^4}{{\sigma \sqrt{2\pi}}} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right) \; \)$

Often a quantity excess kurtosis which is \(\langle\left( x - \overline{x} \right)^4\rangle / \sigma^4 - 3\) is defined. This provides a simple measure of how the tails of the distribution compare to that of a Gaussian, positive values of the excess kurtosis mean the tails are heavier than a Gaussian, whereas negative values mean the tails are weaker than a Gaussian.

Poisson Distribution

The moments can be defined for discrete distributions like the Poisson distribution. Recall the discrete PDF for a Poisson is this: $\(\Large P(k; \lambda) = \frac{e^{-\lambda} \lambda^k}{k!}\)\( and it has a single parameter \)\lambda$.

Since the distribution is discrete, the integral becomes a sum:

The variance is: $\(\Large \langle \left( x - \langle x \rangle \right)^2 \rangle = \sum_{k = 0}^{\infty} \left( k - \lambda\right)^2 \frac{e^{-\lambda} \lambda^k}{k!} = \lambda\)$

Unlike the Gaussian where the mean and variance are independent, in the Poisson they are the same. Often it is interesting to characterize the relative width of a distribution, \(\sigma / \mu\). For a Gaussian, this quantity can be anything because \(\sigma\) and \(\mu\) are independent, but for a Poisson, this quantity is a function of \(\lambda\) only and is always \(1/\sqrt{\lambda}\). The relative width of a Poisson decreases with increasing \(\lambda\).

Uniform Distribution

For the uniform distribution: $\(\Large f(x;a) = \begin{cases} \frac{1}{a} & 0 \leq x \leq a \\ 0 & otherwise \end{cases} \)\( the mean is trivial: \)\langle x \rangle = a / 2$.

The variance is a little more interesting: $\(\Large \langle \left(x- a/2 \right)^2\rangle = \int_{0}^{a} \left(x- a/2 \right)^2 \frac{dx}{a} \;\)\( \)\(\Large \langle \left(x- a/2 \right)^2\rangle = \frac{a^2}{12}.\)$

\(\chi^2\) Distribution

Recall the \(\chi^2\) distribution: $\(\Large f(x;n) = \frac{1}{{2^{n/2}\Gamma(n/2)}} x^{(n/2)-1} e^{-x/2}\)$

This distribution shows up in fitting distributions (which we’ll see in Week 6), and it’s worth understanding the mean and variance of this distribution. Here the mean: $\(\Large \langle x\rangle = \int dx \frac{x}{{2^{n/2}\Gamma(n/2)}} x^{(n/2)-1} e^{-x/2}\;\)$

The \(\chi^2\) distribution has the same qualitative feature as the Poisson distribution in that the relative width is proportional to \(1/\sqrt{\mu}\) (though in this case there is a factor of \(1/\sqrt{2}\) as well. We’ll see this has consequences in fitting….

Briet-Wigner Distribution

And finally the Breit-Wigner distribution. Recall the PDF is: $\(\Large P(x; \Gamma, x_0) = \frac{1}{\pi}\frac{\Gamma}{(x - x_0)^2 + \Gamma^2}.\)$

The mean would be: $\(\Large \Large \langle x\rangle = \int dx \frac{x}{\pi}\frac{\Gamma}{(x - x_0)^2 + \Gamma^2}.\)$

However, this integral is divergent over the range \(-\infty \to 0\) and the range \(0 \to \infty\) so the mean is not defined. The same is true for the variance and other higher moments.

Moments from a Dataset

The moments discussed above are properties of analytic probability distributions. That’s good to know but a finite dataset is never as well known as the analytic distributions. Of course a dataset might not obey any of the probability distributions discussed here anyway.

Here we’ll go over how to get at the moments of some datasets and discuss a bit about how they are actually used in real situations.

blobs.describe()

np.mean(blobs, axis=0)

np.var(blobs, axis=0)

np.std(blobs, axis=0)

Just a little be of cross checking to make sure everything is doing what we expect.

assert np.allclose(np.std(blobs, axis=0) ** 2, np.var(blobs, axis=0))

assert np.allclose(

np.mean((blobs - np.mean(blobs, axis=0)) ** 2, axis=0),

np.var(blobs, axis=0))

ntries = 1000

gaussarray_wide = np.array([])

gaussarray_narrow = np.array([])

for _ in range(ntries):

value = gauss(0, 0.2)

gaussarray_narrow = np.append(gaussarray_narrow,value)

for _ in range(ntries):

value = gauss(0, 1)

gaussarray_wide = np.append(gaussarray_wide,value)

fig, (ax1, ax2) = plt.subplots(1, 2)

fig.suptitle('wide and narrow gaussians')

ax1.hist(gaussarray_wide, bins=40, range=[-5,5])

ax2.hist(gaussarray_narrow, bins=40, range=[-5,5])

print("hi!")

ntries = 1000

gaussarray = np.array([])

for _ in range(ntries):

value = gauss(0, 2)

gaussarray = np.append(gaussarray,value)

plt.hist(gaussarray)

print("Gaussian")

print("first central moment", moment(gaussarray, moment=1))

print("variance", moment(gaussarray, moment=2))

print("skewness", moment(gaussarray, moment=3)/(moment(gaussarray,moment=2))**1.5)

print("kurtosis", moment(gaussarray, moment=4)/(moment(gaussarray,moment=2))**2)

When calculating selected moments for random numbers generated for a from a given distribution, the numbers we get are close but not identical to the theoretical values calculated above. We’ll discuss how close these should be to the ideal case further down.

ntries = 1000

bwarray = np.array([])

for _ in range(ntries):

value = np.random.standard_cauchy(1)

bwarray = np.append(bwarray,value)

plt.hist(bwarray)

print("Breit-Wigner (Cauchy)")

print("first central moment", moment(bwarray, moment=1))

print("variance", moment(bwarray, moment=2))

print("skewness", moment(bwarray, moment=3)/(moment(bwarray,moment=2))**1.5)

print("kurtosis", moment(bwarray, moment=4)/(moment(bwarray,moment=2))**2)

ntries = 1000

uarray = np.array([])

for _ in range(ntries):

value = np.random.uniform()

uarray = np.append(uarray,value)

plt.hist(uarray)

print("Uniform")

print("first central moment", moment(uarray, moment=1))

print("variance", moment(uarray, moment=2))

print(1/12)

Back to an Arbitrary Dataset

#always check the documentation to make sure the definitions are the same

print("the first central moment ought to be 0:", moment(blobs, moment=1))

print("the second central moment", moment(blobs, moment=2))

print("third central moment", moment(blobs, moment=3))

print("fourth central moment", moment(blobs, moment=4))

plt.hist(blobs['x2'])

Moments for a Dataset with Unknown Shape

For the blobs dataset, the results might not be particularly illuminating, but the higher order moments can be particularly useful when you are trying to compare datasets for which you don’t have a model (e.g. you don’t know the dataset should be Gaussian or Poisson or …) or you would just like to make a statement independent of models.

Here is an example from the LHC. In this case the ALICE collaobration measured the average momentum of all the particles produced in a single collision between two lead nuclei. They then plotted the distribution of this average from a large collection of collisions (plot on the left, where the different sets of points are different classes of events) https://arxiv.org/pdf/2308.16217 . You can see that even within event classes there is a distribution of the average momentum of the particles in the separate collisions.

They then calculate the skewness and kurtosis of these distributions. Additionally, these same quantities can also be calculated using theoretical models (shown as the lines on the middle and right panels). Neither the data nor the models are well described by Gaussians (though the data does become more Gaussian-like moving toward the right on the skewness and kurtosis plots).

Mixed Moments

In addition to the moments of a single variable, for multivariate distributions, mixed-moments can be defined. As an example, take the equation above but now let \(g = g(x,y) = x^ny^m\):

Then \(\langle g\rangle\) would be the raw moment of order \(n\) of x and \(m\) of y. Central and standard mixed moments can be defined analogously to those for one variable above.

For example the central moment of order \(n\) of x and \(m\) of y would be \(g(x,y) = (x-\bar{x})^n(y-\bar{y})^m\) This can be generalized to an aritbrary number of variables.

This can seem esoteric, but for two variables and \(m=n=1\), we will define the correlation and covariance below that is a key concept for interpreting data and understanding how two quantities are related to each other.

Covariance

If you have more than one variable, a key question is whether having more than one variable providing new information or are the variables just repeating the same information? Here will will introduce covariance and correlation.

The covariance between x and y is defined as: $\( \Large \text{Cov}_{xy} \equiv \langle\left( x - \overline{x}\right) \left( y - \overline{y}\right)\rangle \; . \)$

A useful combination of the correlation and variances is the correlation coefficient,

which, by construction, must be in the range \([-1, +1]\).

We say that the variance and correlation are both second-order moments since they are expectation values of second-degree polynomials.

We call the random variables \(x\) and \(y\) uncorrelated when:

To obtain an empirical estimate of the quantities above derived from your data, use the corresponding numpy functions, as we’ll see below.

Covariance Matrix

It is useful to construct the covariance matrix \(C\) with elements:

With more than 2 random variables, \(x_0, x_1, \ldots\), this matrix generalizes to:

Comparing with the definitions above, we find variances on the diagonal:

and symmetric correlations on the off-diagonals:

(The covariance is not only symmetric but also positive definite).

Here we can take a look at the covariance matrix for two variables in the blobs dataset.

plt.scatter(blobs['x0'], blobs['x1'], s=10)

np.corrcoef(blobs['x0'], blobs['x1'])

Uncorrelated versus Independent

It is tempting to think that if two variables are uncorrelated, they are independent. However that is not the case.

Let’s take the simple case two random variables X and Y where \(X = x\) and \(Y = x^2\). Clearly these two variables are not independent. If you know any value of x, you can determine y and vise versa. Let’s use the machinary from last week to calculate the correlation coefficient with x sampled from a Guassian centered at \(x=0\)

ntries = 10000

gaussarray = np.array([])

gaussarray_sq = np.array([])

for _ in range(ntries):

value = gauss(0, 2)

if value > -100:

gaussarray = np.append(gaussarray,value)

gaussarray_sq = np.append(gaussarray_sq,value*value)

print(np.corrcoef(gaussarray, gaussarray_sq))

plt.scatter(gaussarray, gaussarray_sq, s=10)

The plot looks sensible, but the correlation coefficent is approximately zero. Running more events isn’t going to give us the strong correlation we might expect. We can clearly see that given a value of X, Y is uniquely determined.

Let’s take a look at this mathematically for our case of \(X = x\) and \(Y = x^2\) with x being symmetric around the origin. In that case: $\( \bar{X} = 0 \\ \bar{Y} = \langle x^2 \rangle \)\( plugging in what we know: \)\( \bar{Y} = \sigma^2_x \)\( so the correlation between X and Y is: \)\(\text{Cov}_{xy} = \langle\left( x\right) \left( x^2 - \overline{x^2}\right)\rangle \\ = \langle (x\left( x^2 - \sigma^2\right)\rangle \\ =\langle x^3 - x^2\langle x\rangle \rangle = 0. \)$

This is true because the function is symmetric about \(x=0\). Let’s look at the same Gaussian centered at the origin, but only with the requirement that \(x>0\).

ntries = 10000

gaussarray = np.array([])

gaussarray_sq = np.array([])

for _ in range(ntries):

value = gauss(0, 2)

if value > -0:

gaussarray = np.append(gaussarray,value)

gaussarray_sq = np.append(gaussarray_sq,value*value)

print(np.corrcoef(gaussarray, gaussarray_sq))

plt.scatter(gaussarray, gaussarray_sq, s=10)

We have seen that if two variables are uncorrelated they are not necessarily independent. However, if two variables are independent then they are uncorrelated. This means you can’t necessarily directly take the correlation between two variables as telling you how much extra information is being provided by the second quantity.

If we just had the datasets themselves and didn’t know the \(Y = x^2\) relationship set out above, we might calculate the correlation coefficient and think that the two variables are independent. The plots however make clear that if you know \(x\) you directly know \(Y\).

Other Correlation Measures

\(\rho_{xy}\) is known as the Pearson correlation coeffient and is sensitive to the degree of linear correlation and is very common in physics (just google it).

There are other measures of the correlation, e.g. Spearman’s \(\rho\) and other rank correlation measures.