Uncertainties on the Mean#

import numpy as np

%matplotlib inline

import matplotlib.pyplot as plt

import seaborn as sns; sns.set()

import numpy as np

import pandas as pd

from random import gauss

import scipy.stats

from scipy.stats import moment

from scipy.stats import poisson

Given a dataset of a finite size, there are uncertainties on any of the associated moments. We’ll discuss the special case of counting experiments using the Poisson distribution. We’re going to focus on the uncertainty on the mean.

Last week we discussed:

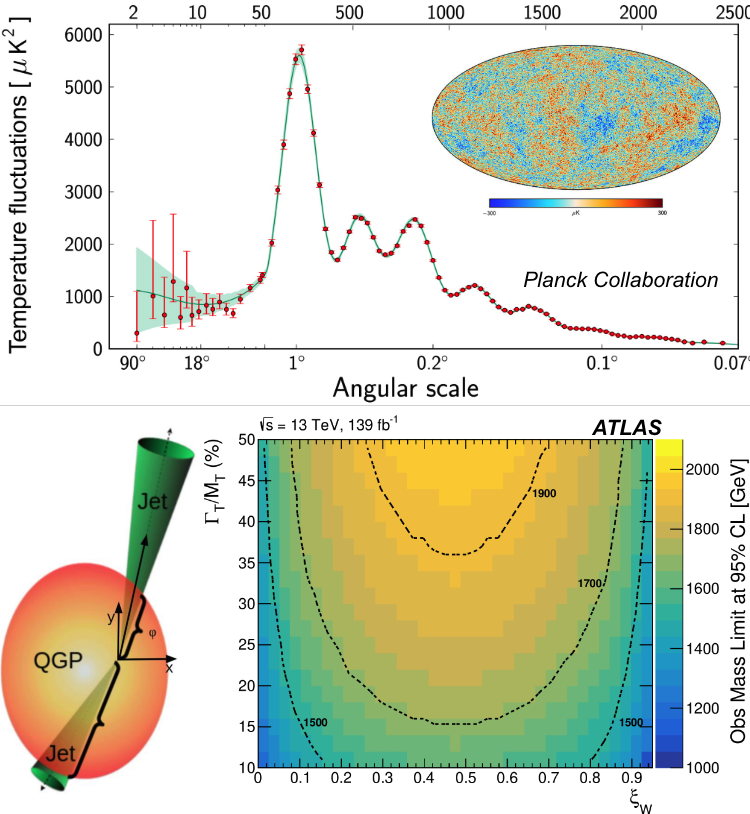

The Poisson distribution is a useful model for describing the statistics of event-counting rates in (uncorrelated) counting measurements (which are ubiquitous in astronomy and particle physics).

It’s useful to note that the Poisson distribution is defined for integer values of \(k\), but \(\lambda\) of course doesn’t need to be an integer.

As we discussed in the last notebook \(\lambda\) is both the mean and the variance of the Poisson distribution. Thus the relative width of the Poisson distribution goes as \(1/\sqrt{\lambda}\).

mu = 1.5

rv = poisson(mu)

R = poisson.rvs(mu, size=1000)

highval = np.max(R)

binedge = np.linspace(-0.5, highval+0.5, highval+2)

#this binning definition makes each bin have a width of 1 and has the bin centers be integers

plt.hist(R,bins = binedge)

print("mean: ", np.mean(R), " & variance: ", np.var(R))

#note with a Poisson, the mean doesn't have to be an integer even though the distribution itself only generates integers

This means that the relative uncertainty on the mean of a counting experiment is \(1/\sqrt{\lambda}\). That means

that for a counting experiment the uncertainty on the mean

becomes smaller with \(1/\sqrt{n}\). This is really fundamental to much of experimental physics.

If you want 10% precision you need:

$\(1/\sqrt{N} = 0.1\\

N=100\)\(

if you want 1% precision you need:

\)\(1/\sqrt{N} = 0.01\\

N=10000\)$

Precision improves quickly at first as you add data, but then much more slowly.

Counting Experiments

So what is a counting experiment where the Poisson is so useful?

A classic example is counting radioactive decays. Let’s say you have a sample of Americium-241 (this is the isotope used in smoke detectors). You want to know how many decays per second are measured. It doesn’t make sense to just measure for one second because then you won’t get very many decays and it’s tough to measure the one second. You decide to measure for 1 minute (60 seconds) and divide the total rate by 60 seconds to get the rate per second.

Over the 60 seconds you measure 1594 decays. Then the best estimate for the mean of the Poisson distribution for the number of decays in 60 seconds is 1594. How much variation would you expect if you did this measurement again. It seems unreasonable that you would get exactly 1594 again but how do you understand the uncertainty on your measurement?

Well we know this is a Poisson distribution and now \(\lambda = 1594\), the variance is also 1594 and the standard deviation of the Poisson is \(\sqrt{\lambda} = 40\).

Thinking of the relative width from the last notebook, \(1/\sqrt{\lambda} = 0.03\). The overall precision of the measurement is about 3%.

We weren’t interested in the decay rate per minute, we wanted the decays per second. The best estimate of that is:

decays / second = \(1594 / 60 s = 26.56 / s\)

3% of \(26.56 / s\) is \(0.8 /s\)

so our measurement is this sample produces 26.6 \(\pm\) 0.8 decays / second.

You have a very distractable colleague who cannot imagine running a counting measurement for a whole minute. They decide to make the same measurement but only for 5 seconds. Over 5 seconds they measure 134 decays. There we havee \(\lambda = 134\) and \(1/\sqrt{\lambda} = 0.08\). Following the above this measurement is 26 \(\pm\) 2 decays / second.

Finally, you have a colleague who gets engrossed in a book while the experiment is running and takes data for 10 minutes. They measure 16,258 decays for 27.1 \(\pm\) 0.2 decays / second.

A few thoughts about the above example:

increasing the number of counts in a sample is what decreases the uncertainty, often that just means running the experiment for a longer amount of time.

the reason that is the case is because the relative width (uncertainty) is only a function of the total number of counts that you have, not the absolute rate.

doubling the number of counts decreases the uncertainty by \(1/\sqrt{2} = 0.707\). In the beginning doubling is easy and eventually it becomes hard…

Uncertainty Evaluation by Sampling

The analytic formulas for uncertainty evaluation are extremely useful but in many cases, using the data itself to evaluate the uncertainties is useful. These techniques go by names like subsampling and bootstrapping and they are useful for datasets that involve complicated calculations (making direct propagation difficult) or the analytic techniques don’t work for some reason.

This seems like a scam, but it works as we’ll see below. Basically this replaces the analytic calculations we worked through with computing power (to generate the bootstraps as well see below).

rng = np.random.default_rng()

from scipy.stats import norm

dist = norm(loc=2, scale=4) # let's just start with a Gaussian

#making 100 random values

data = dist.rvs(size=100, random_state=rng)

plt.hist(data)

For these 100 values we would expect to know the mean to the level of \(4/\sqrt{100} = 0.4\) (think of the lecture slides) and we don’t have an estimate of how precisely we should know the standard deviation.

print("mean: ",np.mean(data))

print("standard dev: ",np.std(data))

The deviation of the sample mean from the expected value checks out with our estimate.

from scipy.stats import bootstrap

print(type(data))

data_tuple = (data,) # samples must be in a sequence

print(type(data_tuple))

The bootstrapping code has an argument confidence_interval. The uncertainties we have generally talked about are “1-\(\sigma\)” uncertainties which correspond to a 68% confidence interval. In many places in statistics a higher confidence level is used, often 90% or 95%.

Be aware of what the default value is for any code that you use. That’s an often silent way to get results you do not understand.

res = bootstrap(data_tuple, np.std, n_resamples = 100, confidence_level=0.68, random_state=123)

fig, ax = plt.subplots()

ax.hist(res.bootstrap_distribution, bins=25)

#res.bootstrap_distribution is an nd array with a size of n_resamples

ax.set_title('Bootstrap Distribution')

ax.set_xlabel('statistic value')

ax.set_ylabel('frequency')

plt.show()

print(type(res))

print(res.standard_error)

res.bootstrap_distribution.shape

This is the distribution of the standard deviation value of the resampled distributions. There is one entry in this histogram for each n_resample. For each resample, the software pulls the same number of random values according to the original distribution. This resampled distribution will have 100 entries (in this case) just like the original distribution but it will not be identical to the original distribution (just like drawing 100 random numbers according to a Gaussian distribution isn’t exactly a Gaussian distribution). The mean of this resampled distribution is evaluated. The standard deviation of the distribution of means of the resampled distribution is the uncertainty on the mean.

Chosing n_resample depends on computational limits on the high end and math on the low end. Obviously a generating only a few resamplings is not going to be useful or provide an accurate representation of the uncertainty (note the default value of n_resample is 9999 so beware with large datasets). Typical values range from 100-1000 but depend on a lot of factors.

In the example above, we are only calculating the standard deviation of a distribution and so perhaps the bootstrap is overkill.

Let’s try something a little more complicated….

Here we’ll take the data from before and square each element (it’s simpler to go back to the original numpy array and square the elements there then handle the type conversation to what the bootstrapping wants).

data2 = data**2

data2.shape

plt.hist(data2)

data2_tuple = (data2,)

res = bootstrap(data2_tuple, np.std, n_resamples = 300, confidence_level=0.68, random_state=123)

fig, ax = plt.subplots()

ax.hist(res.bootstrap_distribution, bins=25)

#res.bootstrap_distribution is an nd array with a size of n_resamples

ax.set_title('Bootstrap Distribution')

ax.set_xlabel('statistic value')

ax.set_ylabel('frequency')

plt.show()

print(type(res))

print(res.standard_error)

Bootstrapping is a part of a collection of techniques used in sampling. They are all modern because they rely on having computational power.

One of the earliest forms of resampling is jackknife. Here the N points are divided into N samples of size N-1 to understand the variation and look for biases. Another one of these techniques is subsampling. There the dataset is randomly divided into M samples of the same size. For each sample the mean is evaluated. The uncertainty on the mean can then be evaluated using standard techniques for evaluating the uncertainty on the mean (discussed in the slides).

The bootstrap is used heavily in modern physics because it is so straightforward even for complicated quantities. Here is a document describing the use of this technique in ATLAS.